Intro

In my first week at ClassDojo, a small bug fix I was working on presented an intriguing

opportunity. A UI component was displaying stale data after an update due to a bug within a

ClassDojo library named Fetchers, a React Hooks implementation for fetching and managing

server state on the client. I couldn't help but ask “Why do we have a custom React Query

lookalike in our codebase?” A quick peek at the Git history revealed that Fetchers predates

React Query and many other similar libraries, making it our best option at the time. Four years

have passed since then and we now have several other options to consider. We could stick with

Fetchers, but why not use an established library that does the exact same thing? After

discussing the tradeoffs with other engineers, it became apparent that React Query was a better

fit for our codebase. With a vision set, we formulated a plan to migrate from Fetchers to React

Query.

The Tradeoffs, or Lack Thereof

Like most engineering problems, deciding between Fetchers and React Query meant evaluating

some tradeoffs. With Fetchers, we had complete control and flexibility over the API, designing it

directly against our use cases. With React Query, we would have to relinquish control over the

API and adapt to its interface. What ended up being a small downgrade in flexibility was a huge

upgrade in overall cost. Maintaining Fetchers involved time & effort spent writing, evolving,

debugging, testing, and documenting the library, and that was not cheap. Fortunately, React

Query supports all the existing use cases that Fetchers did and then some, so we weren’t really

giving up anything.

As if that wasn't enough to convince us, Fetchers also had a few downsides that were crucial in

our decision-making. The first was that Fetchers was built on top of Redux, a library we’re

actively working on removing from our codebase (for unrelated reasons). The second, due

to the first, is that Fetchers didn’t support callbacks or promises for managing the lifecycle of

mutations. Instead, we only returned the status of a mutation through the hook itself. Often, prop

drilling would separate the mutation function from the status props, splitting the mutation trigger

and result/error handling across separate files. Sometimes the status props were ignored

completely since it wasn’t immediately obvious if a mutation already had handling set up

elsewhere.

// Fetchers Example
import { useEffect } from 'react';

// Partial typing to illustrate the point
type FetcherResult<Params> = {
  start: (params: Params) => void;
  done: boolean;
  error: Error;
}

// More on this later...
const useFetcherMutation = makeOperation({ ... });

const RootComponent = () => {
  const { start, done, error }: FetcherResult = useFetcherMutation();

  // Success/error handling lives here, far from where the mutation is triggered
  useEffect(() => {
    if (error) {
      // handle error
    } else if (done) {
      // handle success
    }
  }, [done, error]);

  return (
    <ComponentTree>
      <LeafComponent start={start} />
    </ComponentTree>
  )
}

const LeafComponent = ({ start }) => {
  const handleClick = () => {
    // No way to handle success/error here, we can only call it.
    // There may or may not be handling somewhere else...?
    start({ ... });
  };

  return <button onClick={handleClick}>Start</button>;
}

With React Query, the mutation function itself allows for handling the trigger & success/error

cases co-located:

// React Query
// Partial typing to illustrate the point
type ReactQueryResult<Params, Result> = {
  start: (params: Params) => Promise<Result>;
}

// More on this later...
const useReactQueryMutation = makeOperation({ ... });

const RootComponent = () => {
  const { start }: ReactQueryResult = useReactQueryMutation();

  return (
    <ComponentTree>
      <LeafComponent start={start} />
    </ComponentTree>
  )
}

const LeafComponent = ({ start }) => {
  // Mutation trigger & success/error cases colocated
  const handleClick = async () => {
    try {
      const result = await start({ ... });
      // handle success
    } catch(ex) {
      // handle error
    }
  }

  return <button onClick={handleClick}>Start</button>;
}

Finally, Fetchers’ cache keys were string-based, which meant they couldn’t provide granular

control for targeting multiple cache keys like React Query does. For example, a cache key’s

pattern in Fetchers looked like this:

const cacheKey = 'fetcherName=classStoryPost/params={"classId":"123","postId":"456"}'

In React Query, we get array-based cache keys that support objects, allowing us to target

certain cache entries for invalidation using partial matches:

const cacheKey = ['classStoryPost', { classId: '123', postId: '456' }];

// Invalidate all story posts for a class

queryClient.invalidateQueries({ queryKey: ['classStoryPost', { classId: '123' }] });
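
To see why structured keys make this kind of targeting possible, here is a minimal sketch of a partial matcher. It is a simplified illustration of the idea, not React Query's actual implementation; the names `partialMatch`, `cachedKeys`, and `classFilter` are hypothetical.

```typescript
// Returns true when everything in `filter` also appears in `key`.
// Arrays are compared index by index, objects property by property.
function partialMatch(key: unknown, filter: unknown): boolean {
  if (key === filter) return true;
  if (typeof key !== "object" || typeof filter !== "object" || key === null || filter === null) {
    return false;
  }
  if (Array.isArray(key) !== Array.isArray(filter)) return false;
  const k = key as Record<string, unknown>;
  const f = filter as Record<string, unknown>;
  return Object.keys(f).every((prop) => prop in k && partialMatch(k[prop], f[prop]));
}

// Hypothetical cache contents: three story posts across two classes.
const cachedKeys: unknown[][] = [
  ["classStoryPost", { classId: "123", postId: "456" }],
  ["classStoryPost", { classId: "123", postId: "789" }],
  ["classStoryPost", { classId: "999", postId: "111" }],
];

// Select every story post that belongs to class 123.
const classFilter: unknown[] = ["classStoryPost", { classId: "123" }];
const matched = cachedKeys.filter((key) => partialMatch(key, classFilter));

// A shorter filter selects every story post, regardless of class.
const allPosts = cachedKeys.filter((key) => partialMatch(key, ["classStoryPost"]));
```

With a string key like the Fetchers one, the same selection would require parsing the serialized params back out of the string before comparing them.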

The issues we were facing were solvable problems, but not worth the effort. Rather than

continuing to invest time and energy into Fetchers, we decided to put it towards migrating our

codebase to React Query. The only question left was “How?”

The Plan

At ClassDojo, we have a weekly “web guild” meeting for discussing, planning, and assigning

engineering work that falls outside the scope of teams and their product work. We used these

meetings to drive discussions and gain consensus around a migration plan and divvy up the

work to developers.

To understand the plan we agreed on, let’s review Fetchers. The API consists of three primary

functions: makeMemberFetcher, makeCollectionFetcher, and makeOperation. Each is

a factory function for producing hooks that query or mutate our API. The hooks returned by each

factory function are almost identical to React Query’s useQuery, useInfiniteQuery, and

useMutation hooks. Functionally, they achieve the same things, but with different options,

naming conventions, and implementations. The similarities between the hooks returned from

Fetchers’ factory functions and React Query made for the perfect place to target our migration.

The plan was to implement alternate versions of Fetchers’ factory functions using the same API

interfaces, but instead using React Query hooks under the hood. By doing so, we could ship

both implementations simultaneously and use a feature switch to toggle between the two.

Additionally, we could rely on Fetchers’ unit tests to catch any differences between the two.
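
As a rough sketch of what "same interface, React Query under the hood" can look like, the adapter below maps a mutation result onto a Fetchers-style shape. This is an assumption-laden illustration, not our actual implementation: `MutationLike` models only a small subset of what `useMutation` returns (`mutateAsync`, `isSuccess`, `error`), and `adaptMutation` and `fakeMutation` are hypothetical names.

```typescript
// Subset of the fields returned by React Query's useMutation (assumed to be enough here).
type MutationLike<Params, Result> = {
  mutateAsync: (params: Params) => Promise<Result>;
  isSuccess: boolean;
  error: Error | null;
};

// Fetchers-style result: the familiar done/error fields plus a promise-returning start.
type FetcherResult<Params, Result> = {
  start: (params: Params) => Promise<Result>;
  done: boolean;
  error: Error | undefined;
};

// Translate names and conventions so existing call sites keep working unchanged.
function adaptMutation<Params, Result>(
  mutation: MutationLike<Params, Result>
): FetcherResult<Params, Result> {
  return {
    start: (params) => mutation.mutateAsync(params),
    done: mutation.isSuccess,
    error: mutation.error ?? undefined,
  };
}

// Stand-in for a real mutation so the sketch runs without React.
const fakeMutation: MutationLike<{ id: string }, string> = {
  mutateAsync: (params) => Promise.resolve(`saved ${params.id}`),
  isSuccess: false,
  error: null,
};

const adapted = adaptMutation(fakeMutation);
```

Because callers only ever see the `FetcherResult` shape, the feature switch can swap the implementation behind it without touching any call site.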

Our plan felt solid, but we still wanted to be careful in how we rolled out the new

implementations so as to minimize risk. Given that we were rewriting each of Fetchers’ factory

functions, each could introduce its own class of bugs. On top of that, our

front end had four different apps consuming the Fetchers library, layering on additional usage

patterns and environmental circumstances. Spotting errors thrown inside the library code is

easy, but spotting errors that cascade out to other parts of the app as a result of small changes

in behavior is much harder. We decided to use a phased rollout of each factory function one at a

time, app by app so that any error spikes would be isolated to one implementation or app at a

time, making it easy to spot which implementation had issues. Below is some pseudocode that

illustrates the sequencing of each phase:

for each factoryFn in Fetchers:
  write factoryFn using React Query
  for each app in ClassDojo:
    roll out React Query factoryFn using feature switch
    monitor for errors
    if errors:
      turn off feature switch
      fix bugs
      repeat
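
One way to drive that sequencing is a switch table keyed by app and factory function. The sketch below is hypothetical: the app names (`appA`, `appB`), the `RolloutConfig` shape, and `pickImplementation` are assumptions for illustration, not our real feature switch system.

```typescript
type FactoryName = "makeMemberFetcher" | "makeCollectionFetcher" | "makeOperation";

// Which factory functions use the React Query implementation in each app.
type RolloutConfig = Record<string, Partial<Record<FactoryName, boolean>>>;

// Hypothetical mid-rollout state: appA is one phase ahead of appB.
const rollout: RolloutConfig = {
  appA: { makeMemberFetcher: true, makeCollectionFetcher: true },
  appB: { makeMemberFetcher: true },
};

// Fall back to the old implementation unless the switch is explicitly on.
function pickImplementation<T>(
  config: RolloutConfig,
  app: string,
  factory: FactoryName,
  oldImpl: T,
  newImpl: T
): T {
  const enabled = config[app]?.[factory] === true;
  return enabled ? newImpl : oldImpl;
}

const appAChoice = pickImplementation<string>(rollout, "appA", "makeCollectionFetcher", "fetchers", "reactQuery");
const appBChoice = pickImplementation<string>(rollout, "appB", "makeCollectionFetcher", "fetchers", "reactQuery");
const unknownAppChoice = pickImplementation<string>(rollout, "appC", "makeOperation", "fetchers", "reactQuery");
```

Defaulting to the old implementation means that turning a switch off, or never listing an app at all, is always the safe state.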

What Went Well?

Abstractions made the majority of this project a breeze. The factory functions provided a single

point of entry to replace our custom logic with React Query hooks. Instead of having to assess

all 365 usages of Fetcher hooks, their options, and how they map to a React Query hook, we

just had to ensure that the hook returned by each factory function behaved the same way it did

before. Additionally, swapping implementations between Fetchers and React Query was just a

matter of changing the exported functions from Fetchers’ index file, avoiding massive PRs with

100+ files changed in each:

// before migration
export { makeMemberFetcher } from './fetchers';

// during migration
import { makeMemberFetcher as makeMemberFetcherOld } from './fetchers';
import { makeMemberFetcher as makeMemberFetcherNew } from './rqFetchers';

// isRQFetchersOn is the feature switch that selects the React Query implementation
const makeMemberFetcher = isRQFetchersOn ? makeMemberFetcherNew : makeMemberFetcherOld;

export { makeMemberFetcher };

Our phased approach played a big role in the success of the project. The implementation of

makeCollectionFetcher worked fine in the context of one app, but surfaced some errors in the

context of another. It wasn’t necessarily easy to know what was causing the bug, but the surface

area we had to scan for potential problems was much smaller, allowing us to iterate faster.

Phasing the project also naturally lent itself well to parallelizing the development process and

getting many engineers involved. Getting the implementations of each factory function to

behave exactly the same as before was not an easy process. We went through many iterations

of fixing broken tests before the behavior matched up correctly. Doing that alone would have

been a slow and painful process.

How Can We Improve?

One particular pain point with this project was Fetchers’ unit tests. Theoretically, they should

have been all we needed to verify the new implementations. Unfortunately, they were written

with dependencies on implementation details, making it difficult to just run them against a new

implementation. I spent some time trying to rewrite them, but quickly realized the effort wasn't

worth the payoff. Instead, we relied on unit & end-to-end tests throughout the application that

would naturally hit these codepaths. The downside was that we spent a lot of time stepping

through and debugging those other tests to understand what was broken in our new

implementations. This was a painful reminder to write unit tests that only observe the inputs and

outputs.

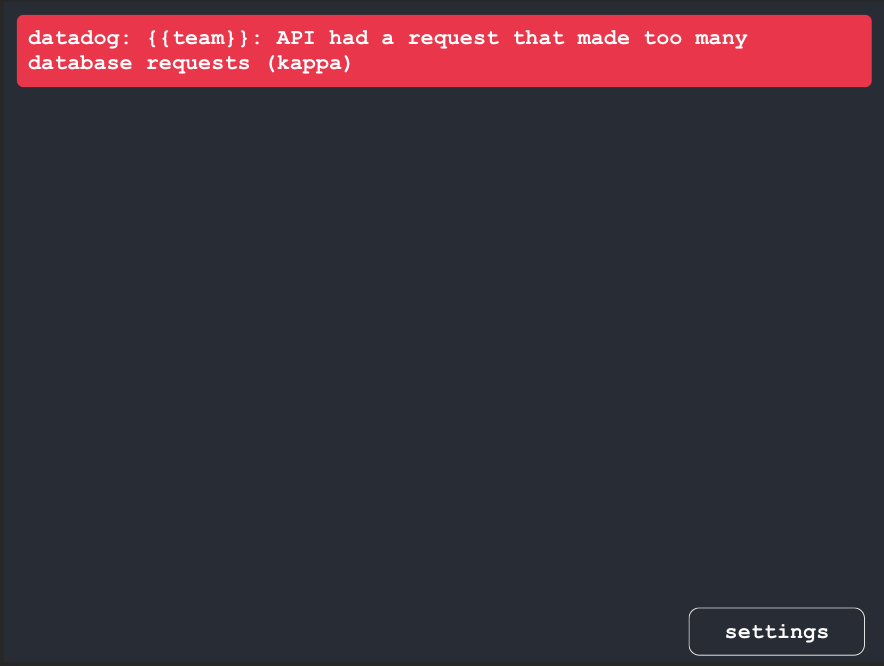
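
To make the lesson about inputs and outputs concrete, here is a small sketch of a test written against a public interface only, so the same checks run unchanged against two different implementations. Everything in it is hypothetical: `KeyValueCache` is a toy stand-in for a library under test, not Fetchers itself.

```typescript
// Toy public interface standing in for the library under test.
type KeyValueCache = {
  get: (key: string) => string | undefined;
  set: (key: string, value: string) => void;
};

// Two implementations with different internals.
function makeMapCache(): KeyValueCache {
  const entries = new Map<string, string>();
  return {
    get: (key) => entries.get(key),
    set: (key, value) => { entries.set(key, value); },
  };
}

function makeObjectCache(): KeyValueCache {
  const entries: Record<string, string> = {};
  return {
    get: (key) => entries[key],
    set: (key, value) => { entries[key] = value; },
  };
}

// The contract touches only get/set, never the internals, and returns a list of failures.
function checkContract(makeCache: () => KeyValueCache): string[] {
  const failures: string[] = [];
  const cache = makeCache();
  if (cache.get("missing") !== undefined) failures.push("unknown key should be undefined");
  cache.set("a", "1");
  if (cache.get("a") !== "1") failures.push("stored value should be readable");
  cache.set("a", "2");
  if (cache.get("a") !== "2") failures.push("set should overwrite the old value");
  return failures;
}

// Run the identical contract against both implementations.
const contractResults = [makeMapCache, makeObjectCache].map(checkContract);
```

A test that instead reached into `entries` directly would have to be rewritten for every new implementation, which is roughly the situation we found ourselves in.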

Another pain point was the manual effort involved in monitoring deployments for errors. When

we rolled out the first phase of the migration, we realized it wasn’t easy to tell whether we were

introducing new errors or not. There was a lot of existing noise in our logs that required

babysitting the deployments and checking reported errors to confirm whether or not the error

was new. We also realized we didn’t have a good mechanism for scoping our error logs down to

the latest release only. We’ve since augmented our logs with better tags to make it easier to

query for the “latest” version. We’ve also set up a weekly meeting to triage error logs to specific

teams so that we don’t end up in the same situation again.

What's Next?

Migrating to React Query was a huge success. It rid us of maintaining a complex chunk of code

that very few developers even understood. Now we’ve started asking ourselves, “What’s next?”

We’ve already started using lifecycle callbacks to deprecate our cache invalidation & optimistic

update patterns. Those patterns were built on top of Redux to subscribe to lifecycle events in

Fetchers’ mutations, but now we can simply hook into the onMutate, onSuccess, and onError callbacks provided

by React Query. Next, we’re going to look at using async mutations to simplify how we handle

the UX for success & error cases. There are still a lot of patterns left over from Fetchers and it

will be a continued effort to rethink how we can simplify things using React Query.
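
As an illustration of the optimistic update pattern those callbacks enable, here is a minimal sketch. The callbacks are shaped like the `onMutate`, `onError`, and `onSuccess` options of `useMutation`, but everything else is an assumption: `posts` is an in-memory stand-in for the query cache, and `simulateMutation` is a hypothetical synchronous runner used only so the sketch runs without React Query.

```typescript
type Post = { id: string; liked: boolean };
type Context = { previous: Post[] };

// Hypothetical in-memory stand-in for the query cache.
let posts: Post[] = [{ id: "456", liked: false }];

const likePostCallbacks = {
  // Apply the change right away and remember the old state for a possible rollback.
  onMutate: (postId: string): Context => {
    const previous = posts;
    posts = posts.map((post) => (post.id === postId ? { ...post, liked: true } : post));
    return { previous };
  },
  // The request failed: restore the snapshot taken in onMutate.
  onError: (_error: Error, _postId: string, context: Context | undefined): void => {
    if (context) posts = context.previous;
  },
  // The server confirmed the change: nothing to roll back.
  onSuccess: (_data: Post, _postId: string, _context: Context | undefined): void => {},
};

// Hypothetical runner that calls the callbacks in lifecycle order.
function simulateMutation(postId: string, request: (id: string) => Post): void {
  const context = likePostCallbacks.onMutate(postId);
  try {
    const data = request(postId);
    likePostCallbacks.onSuccess(data, postId, context);
  } catch (error) {
    const wrapped = error instanceof Error ? error : new Error(String(error));
    likePostCallbacks.onError(wrapped, postId, context);
  }
}

// Record what the UI would see while a failing request is in flight.
const seenWhileInFlight: boolean[] = [];
simulateMutation("456", () => {
  seenWhileInFlight.push(posts[0].liked);
  throw new Error("network down");
});
const likedAfterFailure = posts[0].liked;

// A successful request keeps the optimistic value.
simulateMutation("456", (id) => ({ id, liked: true }));
const likedAfterSuccess = posts[0].liked;
```

In a real component the same three callbacks would be passed to `useMutation`, with the query client taking the place of the `posts` variable.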

Conclusion

Large code migrations can be really scary. There’s a lot of potential for missteps if you’re not

careful. I personally believe that what made this project successful was treating it like a refactor.

The end goal wasn’t to change the behavior of anything, just to refactor the implementation.

Trying to swap one for the other without first finding their overlap could have made this a messy

project. Instead, we wrote new implementations, verified they passed our tests, and shipped them

one by one. This project also couldn’t have happened without the excellent engineering culture

at ClassDojo. Instead of being met with resistance, everyone was eager and excited to help out

and get things moving. I’m certain there will be more projects like this to follow in the future.